Stop using AI as your therapist

It’s a real slippery slope when you start outsourcing your humanity

A recent survey by Sentio University has revealed that 48.7% of respondents used AI, specifically large language models (LLMs) like ChatGPT, for therapy and mental health support.

Unsurprisingly, there’s been a huge backlash from the psychology and psychotherapy communities about the dangers of using a chatbot as your therapist.

Now, I have opinions on AI - some of which I will not get into here because as a creative, a climate-conscious individual, and a person who needs to use AI to remain competitive in my career field, it’s complicated. However, while it’s one thing to leverage AI for shortcutting productive human output, it’s quite another to substitute it for humanity.

There are dozens of news articles here, here and here where psychologists have foretold the dangers of using AI for therapy, but as a person who has tried a lot of different types of therapy, and was also in therapy for a long time, I think it’s worth adding my two cents, em-dashes and all.

Elephant in the room

Let’s start with the most obvious thing: the reason people are turning to AI in the first place. It’s because mental health support, especially in the US where the survey was conducted, is not very accessible.

For the UK at least, waiting lists for common NHS-provided therapy models (usually 10 or so CBT or counselling sessions, either over the phone or in person) can be anything from 6 months to 1 year. Getting a diagnosis for a condition like ADHD or autism, which are also closely related to a lot of mental health conditions, can also take up to 2 years.

I’ve also spoken before about how this particular therapeutic approach and process is not always successful if a patient has more complex, ongoing issues - namely because the sessions are not long enough, not specific enough, and not always consistent enough. Unless you’re at a point of crisis, in which case a completely different process is actioned, getting help can feel impossible.

Going to a private therapist is, again, another ball game. While mental health charities like Mind do offer support, the private option can set you back anything from £50 to £180 per session depending on the type of therapy and the expertise of the therapist.

Spaces are often limited and with more frequent ongoing sessions recommended, you can spend months to years in therapy footing such bills.

Then you have all the other stuff. Being allowed time off work to attend appointments, considering whether you gel with the therapist or type of therapy, the fact that appointments can be cancelled or delayed if something comes up, and of course, that you might need therapy again at different times in your life.

While there are apps to support mental wellbeing, these usually leave a lot to be desired and aren’t tailored to your personal issues. Instead, like search engine results, they will give you broad, general advice on coping strategies like breathing exercises, meditation techniques, and tips for stress management.

So turning to ChatGPT and the like is a cheaper and more efficient option.

Touching grass

It has to be said that a large percentage of the people I know who fanboy/girl way too much over AI usually seem to need the most help functioning as a person.

Yes, it’s cool, and it’s crazy how much stuff it can do, but I always find the mad variety of things humans can do way more interesting.

If you understand how AI works, how it can be leveraged and you have the skills to use it wisely, then it’s a great tool. If you think it can solve all your problems - especially your interpersonal ones - you need to go and touch some grass.

LLMs are the new search engines, replacing Google etc. as a way to find information fast. You can craft an extremely specific prompt that will produce an answer pulled from a plethora of online resources to give you a clear, concise response you can iterate on further.

But this removes a step in how we humans interact with this information. Back in the days of SEO, we’d have to sift through pages of search results to find the most relevant ones and then likely read through a few sources before we got the answer or could draw a corroborated conclusion. And as we know, the internet is rife with misinformation. If we remove our critical thinking and ‘vetting’ from the process, this creates a problem.

This is why seeking any advice online, be it legal, financial, health-related, or about relationships, comes with huge caveats. You ask a forum of strangers and they’ll give you scattered, biased and under-nuanced advice. Or you get a dozen websites, of varying quality and credibility, giving you wildly conflicting, factually questionable advice. Guess what LLMs do?

A brain-scan study from MIT recently suggested that overusing AI may suppress mental performance. It’s bad enough we’re getting lazy enough to let it do our thinking, but our feeling too?

Trusting these tools with the vulnerable and nuanced fragility of the human condition feels uncanny to me and frankly, a sad state of affairs.

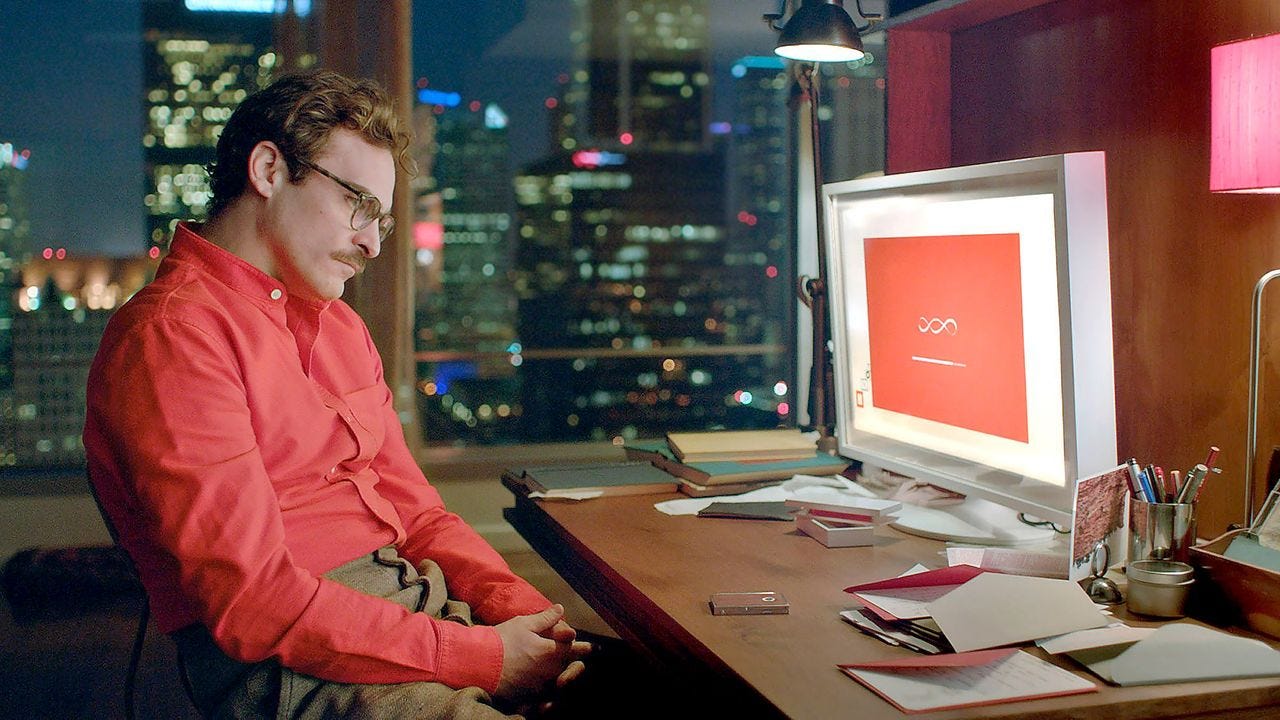

Artificial thinking, artificial feeling

In my desk research for this piece, I looked through threads of people who claimed that ChatGPT was better than any therapist they’d ever had. I found an article on Substack where a writer had used ChatGPT to ‘assess’ who was correct in an argument with their spouse - notably to seek some validation and feel understood. I spoke to people who confessed they often lean on chatbots to help them craft responses for conflict management at work and in their interpersonal relationships. The irony of learning how to have difficult, emotional conversations with people from a machine is not lost on me.

I also tried it out myself.

The output wasn’t terrible, in all honesty. It expressed a sympathetic tone and used the ‘active listening’ (sometimes also called ‘reflective listening’) techniques therapists, mentors etc. often deploy. It also suggested some actions (which is a yes for Cognitive Behavioural Therapy approaches but a no-no for psychoanalytical methods) but nothing really in-depth.

All in all, it took me less than 5 minutes and gave some reasonable explanations for my symptoms but nothing else that deep. My expectations were low so I was pleasantly (?) surprised but I had to invent a fake story to feel remotely comfortable asking it to therapise me.

A fundamental of therapy is to build trust with your therapist so offloading into a small white text box felt bizarre.

Perhaps I’m too ‘in-the-know’ about what good therapy should look and feel like, but the output felt like a flat 2D substitute for the kind of support a friend, colleague, or family member might give, let alone a trained mental health professional. This raises the all-important question of why people feel that a robot is genuinely a more adequate support network than other human beings.

Uncanny valley and why it’s weird

As much as I understand the reasons why people do it (access and costs for therapy, lack of support network, terror of being judged, exposed, or manipulated by another person), it concerns me how much trust vulnerable people are putting into a tool that, ultimately, can’t show you the care you need.

It goes without saying that LLMs are programmed to learn based on the information they consume and how we interact with them. They do not (currently) draw from an internal well of emotions and memories, real, lived experiences, in-person communication, learning, teaching, or research, nor can they properly read our physicality or social cues.

So while yes, they might give you an impartial, seemingly non-judgemental response within minutes, they lack the true empathy, insight, and partiality of a real therapist.

In the case of the writer arguing with her husband, ChatGPT also doesn’t have the historic background context of the people being referred to and it was analysing from screenshots of a text conversation. As we know, communicating online or over messages is ripe for misunderstanding.

LLMs might have unlimited patience but they also fall short elsewhere.

There are some things that we just don’t feel we can tell our friends, partner, or family. Even written in a diary under lock and key, our deepest, darkest thoughts risk being discovered. A therapist removes that judgement and is bound by law to keep it confidential.

What you share with publicly available chatbots is not private. Many studies have also suggested it’s not impartial; they are conditioned to give you favourable responses and tell you what you want to hear.

Therapists, by contrast, will not do that. They may not berate you - and of course, can only work with the version of information you’ve chosen to share - but a good therapist will rarely let you be passive in your own conflicts. They are not there to tell you that nothing is ever your fault.

This is the result of the resilience drought. Those that expect comfort cannot fathom those that continue in discomfort.

I mean, of course you’re lonely if you choose comfort over connection

This has partially been the problem with the misappropriation of ‘therapy speak’ in the online world. It removes people’s need for accountability and instead sees them weaponise concepts like boundaries and ‘protecting their peace’ to keep them in a perpetual state of victimhood. To throw AI into this mix feels dangerous.

But culture has also created a vacuum. The digital age loneliness epidemic is another reason why people turn to chatbots - because connecting with other people feels too hard, too complicated, and too laborious. This makes creating deep connections and building a support network feel impossible. An inadequate or absent support network is often one of the key reasons why people turn to therapy in the first place.

I’m not going to be the bad guy for pointing out that while yes, therapy is a luxury not all of us can access, some of this problem lies in the fact that we just don’t want to talk to people anymore. Be they paid to listen or not.

We’re so uncomfortable with our own humanity, and how others respond to it with their own, that a sanitised response built on data, empty platitudes, and well-optimised resources which tells you that you’re never wrong is the only thing that feels ‘safe’.

It’s not because it’s genuinely better, it’s because it lacks the sheer messiness and ugliness involved with being human.

So don’t fall for it. I’m not sure I even believe in letting it diagnose more physical health issues either. If we let something non-human teach us how to be human, then we’re already disconnected from the cure.